As Ji Hyun Nam slowly tosses a stuffed cat toy into the air, a real-time video captures the playful scene — from around a corner. With further refinements, the technology could find uses in search-and-rescue, defense and medical imaging. (Caution: Video contains flashing lights, which may be a problem for some people, including those with photosensitive epilepsy or a history of migraines and headaches.)

As Ji Hyun Nam slowly tosses a stuffed cat toy into the air, a real-time video captures the playful scene at roughly the frame rate of a 20th-century webcam: a mere five frames per second.

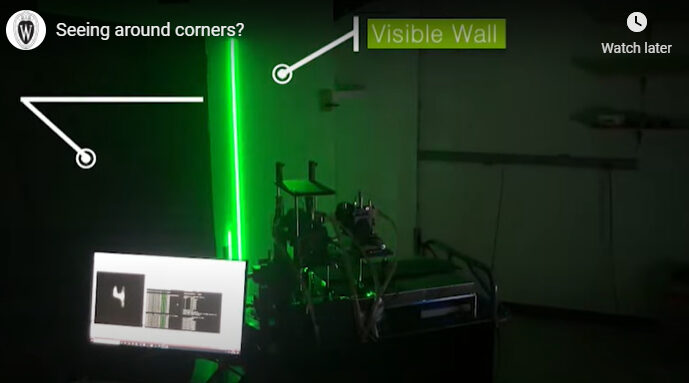

The twist? Nam is hidden around the corner from the camera. The video of the stuffed animal was created by capturing light that bounced off a wall to the toy and back again, using a science-fiction-turned-reality technique known as non-line-of-sight imaging.

And at five frames per second, the video is a blazing-fast improvement over recent hidden-scene imaging, which took minutes to reconstruct a single stationary image.

The new technique uses many ultra-fast, highly sensitive light sensors and an improved video reconstruction algorithm to greatly shorten the time it takes to display hidden scenes. The University of Wisconsin–Madison researchers who created the video say the advance opens the technology up to affordable, real-world applications for both near and distant scenes.

Those future applications include disaster relief, medical imaging and military uses. The technique could also find use outside of around-the-corner imaging, such as improving autonomous vehicle imaging systems. The work was funded by the U.S. Defense Department’s Advanced Research Projects Agency (DARPA) and the National Science Foundation.

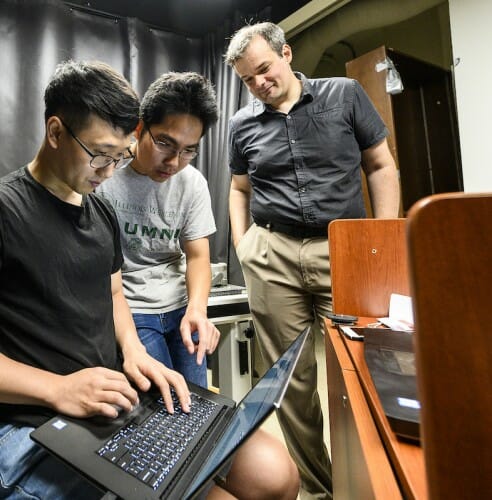

Graduate students Ji Hyun Nam (left) and Toan Le work with assistant professor and principal investigator Andreas Velten in the Computational Optics lab. PHOTO: BRYCE RICHTER

Andreas Velten, a professor of biostatistics and medical informatics at the UW School of Medicine and Public Health, and his team published their findings Nov. 11 in Nature Communications. Nam, a former Velten lab doctoral student, is the first author of the report. UW–Madison researchers Eric Brandt and Sebastian Bauer, along with collaborators at the Polytechnic University of Milan in Italy, also contributed to the new research.

Velten and his former advisor first demonstrated non-line-of-sight imaging a decade ago. Like other light- or sound-based imaging methods, the technique captures information about a scene by bouncing light off a surface and sensing the echoes that come back. But to see around corners, it focuses not on the first echo but on reflections of those echoes.

“It’s basically echolocation, but using additional echoes — like with reverb,” says Velten, who also holds an appointment in the Department of Electrical and Computer Engineering.
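To make the "echoes of echoes" idea concrete, here is a minimal sketch that computes when each echo arrives. It assumes a simplified, hypothetical geometry in which the laser and detector share one position and aim at the same spot on the wall; the coordinates and the function names `path_time` and `arrival_times` are illustrative only, not part of the researchers' system.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s

def path_time(points):
    """Travel time (s) along straight segments through the given 3D points (m)."""
    length = sum(math.dist(a, b) for a, b in zip(points, points[1:]))
    return length / C

def arrival_times(device, wall_spot, hidden_point):
    """Return (first-echo time, hidden-scene echo time) in seconds.

    Hypothetical geometry: laser and detector share one position (`device`)
    and both aim at the same wall spot.
    """
    # First echo: device -> wall -> device (the bright direct reflection).
    first_echo = path_time([device, wall_spot, device])
    # Later echo: device -> wall -> hidden object -> wall -> device.
    hidden_echo = path_time([device, wall_spot, hidden_point, wall_spot, device])
    return first_echo, hidden_echo

first, hidden = arrival_times((0.0, 0.0, 2.0), (0.0, 0.0, 0.0), (0.9, 0.0, 1.2))
print(f"first echo: {first * 1e9:.2f} ns, hidden echo: {hidden * 1e9:.2f} ns")
```

With a wall 2 m away and an object 1.5 m from the wall spot, this prints `first echo: 13.34 ns, hidden echo: 23.35 ns`. The hidden-scene echo trails the first echo by exactly the wall-to-object round trip (3 m, about 10 ns), which is why precise timing carries information about the hidden scene.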

In 2019, members of Velten’s lab demonstrated that they could take advantage of existing imaging algorithms by rethinking the math of the system. The new math allowed them to treat a laser rapidly scanning across a wall as a kind of “virtual camera” that provides a view of the hidden scene.

The algorithms that reconstruct the scenes are fast, and Brandt, a doctoral student in the lab of study co-author Eftychios Sifakis, further improved them for processing hidden-scene data. But data collection for earlier non-line-of-sight imaging techniques was painfully slow, in part because the light sensors often had just a single pixel.
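One classic way to turn timing data from many wall spots into an image is naive backprojection: each candidate point in the hidden scene collects the photon counts from every time bin consistent with its distance to each wall spot. The sketch below is a deliberately simplified stand-in, not the phasor-field "virtual camera" algorithm the team developed; the 100-picosecond bin width, the noise-free toy measurement and all function names are assumptions made for illustration.

```python
import math

C = 299_792_458.0    # speed of light, m/s
BIN_WIDTH = 100e-12  # hypothetical histogram bin width: 100 ps
NUM_BINS = 200       # hypothetical histogram length (20 ns)

def bin_index(spot, point):
    """Histogram bin of the wall -> point -> wall round trip."""
    return int(2.0 * math.dist(spot, point) / C / BIN_WIDTH)

def simulate(wall_spots, hidden_point):
    """Toy, noise-free measurement: one photon count per wall spot."""
    hists = []
    for spot in wall_spots:
        hist = [0] * NUM_BINS
        hist[bin_index(spot, hidden_point)] += 1
        hists.append(hist)
    return hists

def backproject(histograms, wall_spots, voxels):
    """Score each candidate voxel by summing the bins consistent with its distance."""
    scores = []
    for v in voxels:
        total = 0.0
        for spot, hist in zip(wall_spots, histograms):
            k = bin_index(spot, v)
            if 0 <= k < len(hist):
                total += hist[k]
        scores.append(total)
    return scores

wall_spots = [(x, 0.0, 0.0) for x in (-0.5, -0.25, 0.0, 0.25, 0.5)]
hidden = (0.1, 0.0, 1.0)
voxels = [hidden, (-0.3, 0.0, 1.0), (0.1, 0.0, 0.6)]
scores = backproject(simulate(wall_spots, hidden), wall_spots, voxels)
print(scores)  # [5.0, 0.0, 0.0]: only the true location agrees with every wall spot
```

Practical reconstructions are considerably more sophisticated and must cope with noise; this toy version only illustrates why timing measurements from many wall points can pin down a hidden location.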

To advance to real-time video, the team needed specialized light sensors, and more of them. Single-photon avalanche diodes, or SPADs, are now common, even finding their way into the latest iPhones. Able to detect individual photons, they provide the sensitivity needed to capture very weak reflections of light from around corners. But commercial SPADs are about 50 times too slow for this application.

Working with colleagues in Italy, Velten’s lab spent years perfecting new SPADs that can tell the difference between photons arriving just 50 trillionths of a second apart. That ultra-fast time resolution also provides information about depth, allowing for 3D reconstructions. The sensors can also be turned off and on very quickly, helping distinguish different reflections.
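A quick back-of-the-envelope calculation shows why timing at the scale of 50 trillionths of a second (50 picoseconds) carries depth information. Assuming a simple there-and-back path, a timing difference Δt corresponds to an extra path length of cΔt and a depth difference of cΔt/2; the function names below are illustrative.

```python
C = 299_792_458.0  # speed of light, m/s

def path_difference(dt_seconds):
    """Extra distance (m) light travels during dt."""
    return C * dt_seconds

def depth_difference(dt_seconds):
    """Depth separation (m) for a there-and-back path: half the path difference."""
    return C * dt_seconds / 2.0

dt = 50e-12  # 50 trillionths of a second
print(f"{path_difference(dt) * 100:.2f} cm path, {depth_difference(dt) * 1000:.2f} mm depth")
```

This prints `1.50 cm path, 7.49 mm depth`: under this idealized model, photons arriving 50 picoseconds apart correspond to surfaces separated by well under a centimeter in depth.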

“If I send a light pulse at a wall, I get a very bright reflection I have to ignore. I need to look for the much weaker light coming from the hidden scene,” says Velten.
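The gating Velten describes can be mimicked in software with a simple time window. In the real system the SPAD is typically switched off in hardware during the bright first reflection so it is not overwhelmed; the filter below only illustrates the idea. The arrival times and window bounds are hypothetical numbers chosen to roughly match a wall about 2 m away and a hidden object about 1.5 m from the wall.

```python
def gate(arrivals_ps, open_ps, close_ps):
    """Keep photon arrival times (ps) that fall inside the window [open_ps, close_ps)."""
    return [t for t in arrivals_ps if open_ps <= t < close_ps]

# Hypothetical arrival times in picoseconds after the laser pulse: a burst from
# the bright first echo off the wall, then two faint photons from the hidden scene.
arrivals = [13_330, 13_335, 13_340, 13_345, 13_350, 23_340, 23_410]
kept = gate(arrivals, open_ps=14_000, close_ps=30_000)
print(kept)  # [23340, 23410]
```

Opening the window only after the first echo has passed leaves just the much weaker light returning from the hidden scene.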

By using 28 SPAD pixels, the team could collect light quickly enough to enable real-time video with just a one-second delay.

The resulting videos are monochrome and fuzzy, yet able to resolve motion and distinguish objects in 3D space. In successive scenes, Nam demonstrates that the videos can resolve foot-wide letters and pick out human limbs during natural movements. The projected virtual camera can even accurately distinguish a mirror from what it is reflecting, which is technologically challenging for a real camera.

“Playing with our NLOS (non-line-of-sight) imaging setup is really entertaining,” says Nam. “While standing in the hidden scene, you can dance, jump, do exercises and see video of yourself on the monitor in real-time.”

While the video captures objects just a couple of meters from the reflecting wall, the same techniques could be used to image objects hundreds of meters away, as long as they are large enough to see at that distance.

“If you’re in a dark room, the size of the scene isn’t limited anymore,” says Velten. Even with room lights on, the system can capture nearby objects.

Although the Velten team uses custom equipment, the light sensor and laser technology required for around-the-corner imaging is ubiquitous and affordable. Following further engineering refinements, the technique could be creatively deployed in many areas.

“Nowadays you can find time-of-flight sensors integrated in smartphones like iPhone 12,” says Nam. “Can you imagine taking a picture around a corner simply on your phone? There are still many technical challenges, but this work brings us to the next level and opens up the possibilities!”

THIS WORK WAS SUPPORTED BY THE U.S. DEFENSE DEPARTMENT’S ADVANCED RESEARCH PROJECTS AGENCY (DARPA) REVEAL PROJECT (GRANT HR0011-16-C-0025), THE NATIONAL SCIENCE FOUNDATION (GRANTS NSF IIS-2008584, CCF-1812944, IIS-1763638, AND IIS-2106768) AND A GRANT FROM UW–MADISON’S DRAPER TECHNOLOGY INNOVATION FUND. THE TEAM ALSO PARTNERED WITH D2P AND RECEIVED WARF ACCELERATOR FUNDING, ALONG WITH OTHER PROGRAM SUPPORT FROM WARF.